If 2024 was the year everyone talked about AI agents, 2025 was the year every cloud platform shipped a button for building one.

Microsoft built out Copilot Studio and Azure AI Foundry. Google expanded Vertex AI Agent Builder and added its Agent Development Kit. AWS expanded Amazon Bedrock Agents. Salesforce doubled down on Agentforce. Each platform promises roughly the same thing: upload your documents, write a prompt, pick a model, enable some tools, and you have an AI agent.

The barrier to entry dropped from "hire an ML team" to "open a browser tab." But here's what nobody in the marketing slides mentions: the distance between a configurable agent that answers FAQs and a production agent that handles real business processes is enormous. It's the difference between assembling IKEA furniture and building a house.

This post traces the full arc — from the earliest chatbots to today's configurable platforms — and examines what these tools actually deliver, what still requires custom engineering, and where the industry is likely headed.

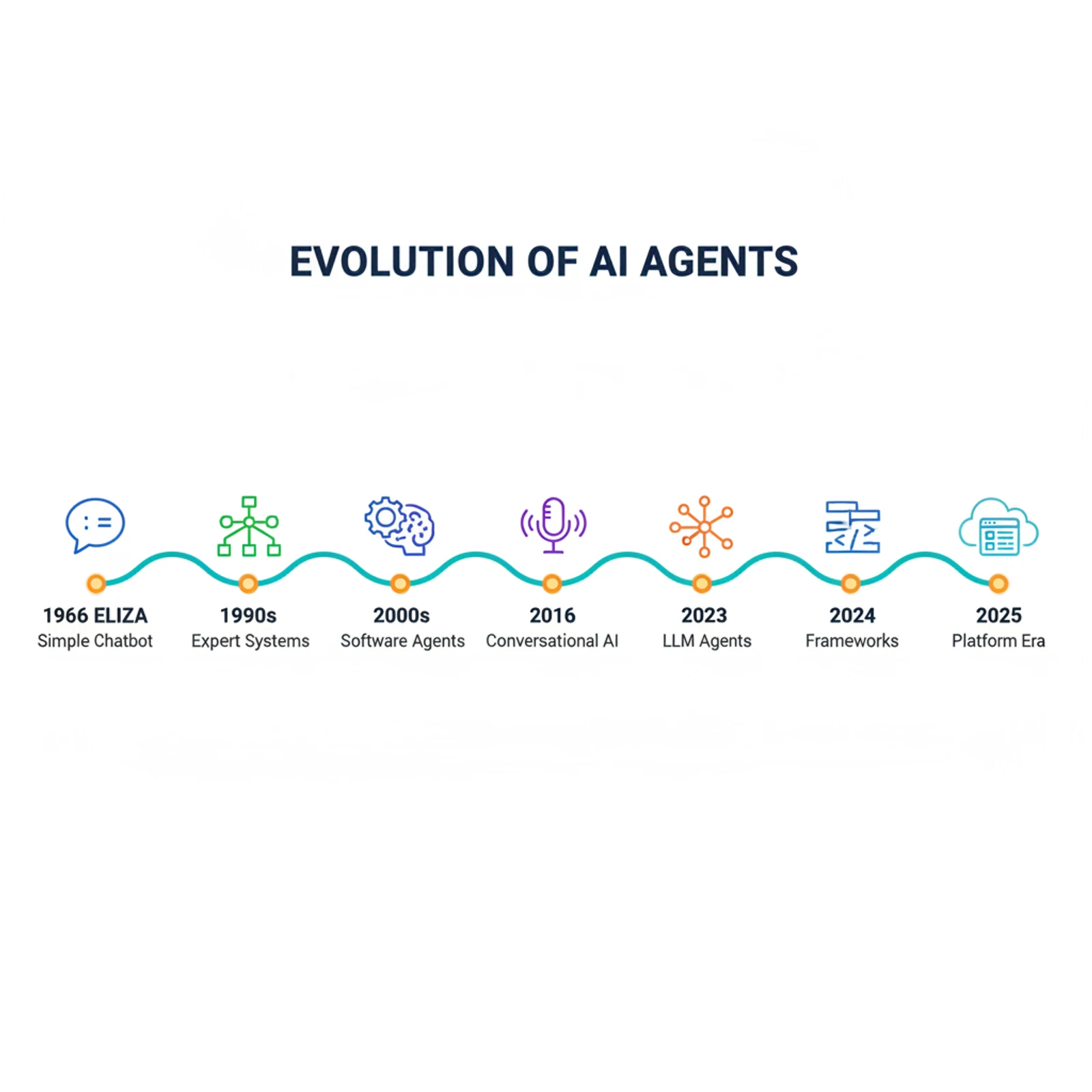

A Brief History of Agents: From ELIZA to Autonomous Systems

The idea of software that acts on your behalf is older than most people realize.

| Year | Milestone | What It Did |
|---|---|---|
| 1966 | ELIZA | Pattern-matched keywords to simulate a therapist. No understanding, just string replacement. |
| 1970s–1980s | Expert Systems | Rule-based agents (MYCIN, CLIPS) that encoded domain knowledge as if-then chains. Brittle but useful in narrow domains. |
| 1990s–2000s | Software Agents | BDI (Belief-Desire-Intention) architecture. Agents had goals, planned actions, and adapted to environments. Mostly academic. |
| 2011–2016 | Conversational AI | Siri, Alexa, Google Assistant: voice-activated agents with limited tool use. Could set timers and play music, not much else. |
| 2023 | LLM-Powered Agents | AutoGPT, BabyAGI, GPT-4 with function calling. The first wave of agents that could reason, plan, and use tools. |
| 2024 | Framework Explosion | CrewAI, LangGraph, AutoGen, Semantic Kernel: proper agent frameworks with memory, tool orchestration, and multi-agent support. |
| 2025 | Platform Era | Every cloud vendor ships configurable agent builders. The "no-code agent" becomes a product category. |

The key inflection point was when large language models gained function calling capabilities in mid-2023. Before that, agents could only generate text. After it, they could take actions — search the web, query databases, call APIs, write files. That single capability turned chatbots into something qualitatively different.

How Modern AI Agents Actually Work

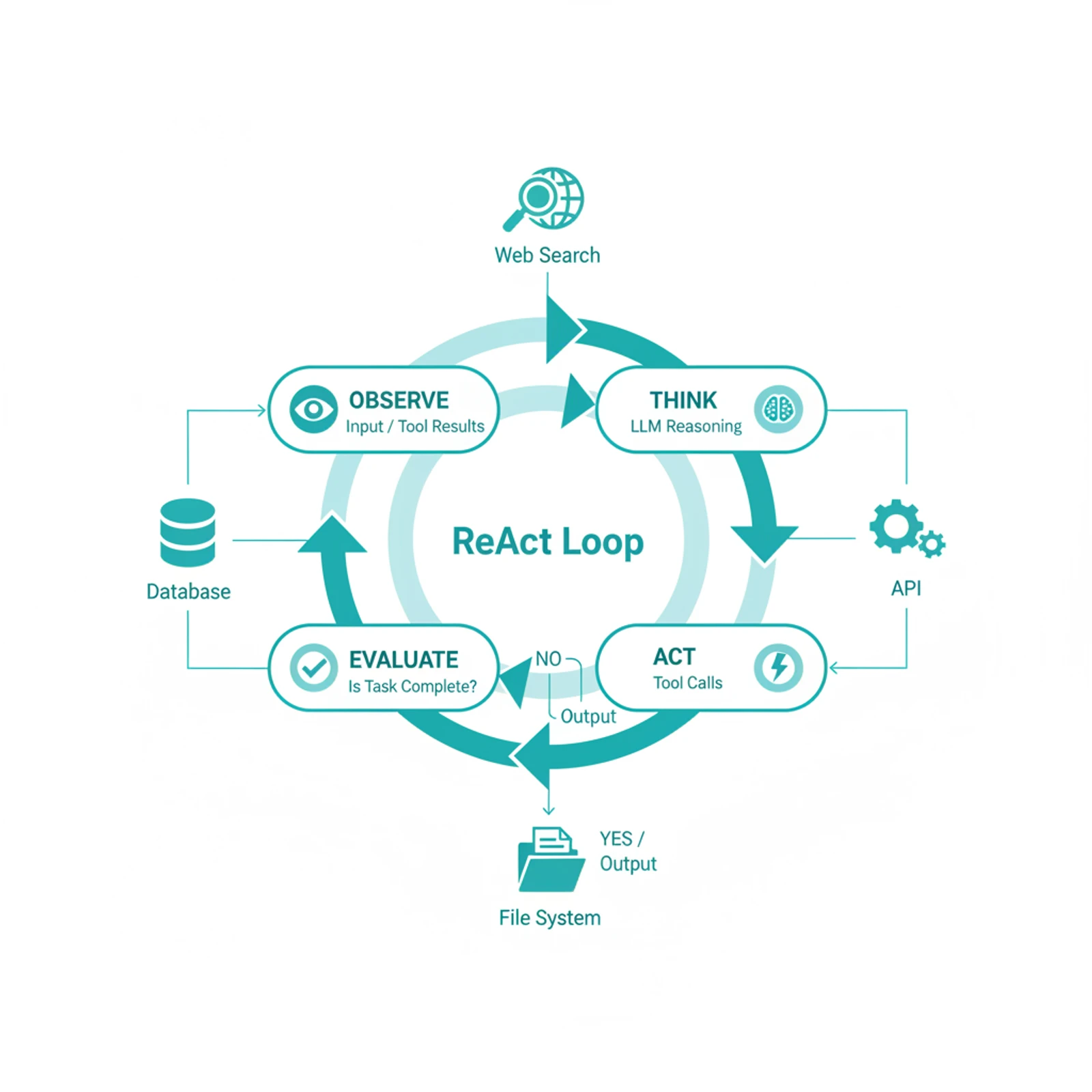

Before evaluating the platforms, it helps to understand what's happening under the hood. Most LLM-based agents follow a pattern called ReAct (Reasoning + Acting), introduced by Yao et al. in 2022.

The loop is simple:

- Observe: The agent receives a task or new information

- Think: The LLM reasons about what to do next (this is the "chain of thought")

- Act: The agent calls a tool — a web search, a database query, an API call

- Observe: The tool returns results

- Think: The LLM evaluates the results and decides whether the task is complete

- Repeat until done or a limit is reached

This is the core mechanism behind every agent you've seen demoed on stage. The differences between platforms come down to how they implement the surrounding infrastructure: memory management, tool orchestration, error recovery, guardrails, and multi-agent coordination.
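To make the loop concrete, here is a minimal Python sketch of a ReAct-style controller. It is not any platform's implementation: the `think` callback stands in for the LLM call, and `Step`, `react_loop`, `scripted_think`, and the toy `search` tool are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Step:
    """One decision from the model: call a tool, or finish with an answer."""
    thought: str
    tool: Optional[str] = None     # tool to call next; None when finishing
    tool_input: str = ""
    answer: Optional[str] = None   # set when the model judges the task complete

def react_loop(task: str, think: Callable[[list], Step],
               tools: dict, max_steps: int = 5) -> str:
    transcript = [f"Task: {task}"]                   # Observe: the incoming task
    for _ in range(max_steps):
        step = think(transcript)                     # Think: the model picks a move
        transcript.append(f"Thought: {step.thought}")
        if step.answer is not None:                  # Think: task judged complete
            return step.answer
        if step.tool not in tools:                   # guard against invented tool names
            transcript.append(f"Observation: unknown tool {step.tool!r}")
            continue
        result = tools[step.tool](step.tool_input)   # Act: call the tool
        transcript.append(f"Observation: {result}")  # Observe: feed the result back
    return "Stopped: step limit reached without an answer."

# Usage: a scripted stand-in for the LLM that searches once, then answers.
def scripted_think(transcript: list) -> Step:
    observations = [line for line in transcript if line.startswith("Observation:")]
    if not observations:
        return Step(thought="I should look this up.", tool="search",
                    tool_input="ReAct paper year")
    return Step(thought="I can answer now.",
                answer=observations[-1].removeprefix("Observation: "))

tools = {"search": lambda query: "ReAct was introduced by Yao et al. in 2022."}
print(react_loop("When was ReAct introduced?", scripted_think, tools))
```

The `max_steps` cap is the "until done or a limit is reached" exit: without it, a model that keeps choosing tools would loop indefinitely.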

Here's what a single agent turn looks like in practice:

User: "What's the status of our Q4 revenue target?"

Agent thinks: I need to check the revenue dashboard. Let me query the database.

Agent acts: [calls SQL tool] SELECT actual, target FROM revenue WHERE quarter = 'Q4 2025'

Agent observes: actual = $4.2M, target = $5.0M

Agent thinks: We're at 84% of target. Let me check the trend.

Agent acts: [calls SQL tool] SELECT month, actual FROM revenue WHERE quarter = 'Q4 2025' ORDER BY month

Agent observes: Oct: $1.2M, Nov: $1.4M, Dec: $1.6M

Agent thinks: Revenue is trending up month-over-month. I can now answer the user.

Agent responds: "Q4 revenue is at $4.2M against a $5.0M target (84%). The trend is positive: each month grew over the prior one, with December at $1.6M. At current trajectory, Q1 may start strong."

The magic isn't in any single step. It's in the LLM's ability to decide which tools to call, interpret the results, and compose a coherent response. The failure modes are also here: if the agent picks the wrong tool, misinterprets results, or gets stuck in a loop, the whole thing falls apart.
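The SQL in the transcript is schematic: the first query returns a single quarter-level row while the second returns monthly rows from the same `revenue` table, and if `month` holds names rather than dates, `ORDER BY month` would sort them Dec, Nov, Oct. Here is a runnable sketch of the same two tool calls, assuming a hypothetical schema with separate `monthly_revenue` and `quarterly_targets` tables in an in-memory SQLite database.

```python
import sqlite3

# Hypothetical schema: monthly actuals plus a separate table of quarterly targets.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE monthly_revenue (quarter TEXT, month_num INTEGER, month TEXT, actual REAL);
    CREATE TABLE quarterly_targets (quarter TEXT PRIMARY KEY, target REAL);
    INSERT INTO monthly_revenue VALUES
        ('Q4 2025', 10, 'Oct', 1200000), ('Q4 2025', 11, 'Nov', 1400000),
        ('Q4 2025', 12, 'Dec', 1600000);
    INSERT INTO quarterly_targets VALUES ('Q4 2025', 5000000);
""")

def sql_tool(query: str, params: tuple = ()) -> list:
    """The 'SQL tool' the agent calls; parameters are bound, not string-formatted."""
    return conn.execute(query, params).fetchall()

# Tool call 1: quarter-to-date actual vs. target.
rows = sql_tool("""
    SELECT SUM(r.actual), t.target
    FROM monthly_revenue AS r JOIN quarterly_targets AS t ON r.quarter = t.quarter
    WHERE r.quarter = ? GROUP BY t.target""", ("Q4 2025",))
actual, target = rows[0]

# Tool call 2: monthly trend, ordered by month number rather than month name.
trend = sql_tool("SELECT month, actual FROM monthly_revenue "
                 "WHERE quarter = ? ORDER BY month_num", ("Q4 2025",))

pct_of_target = actual / target
growing = all(later[1] > earlier[1] for earlier, later in zip(trend, trend[1:]))
print(f"${actual / 1e6:.1f}M of ${target / 1e6:.1f}M ({pct_of_target:.0%}), "
      f"growing every month: {growing}")
```

Binding parameters rather than formatting them into the string matters more for agents than for ordinary code, because the values often originate in model output.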

The Configurable Agent Platforms: What They Actually Offer

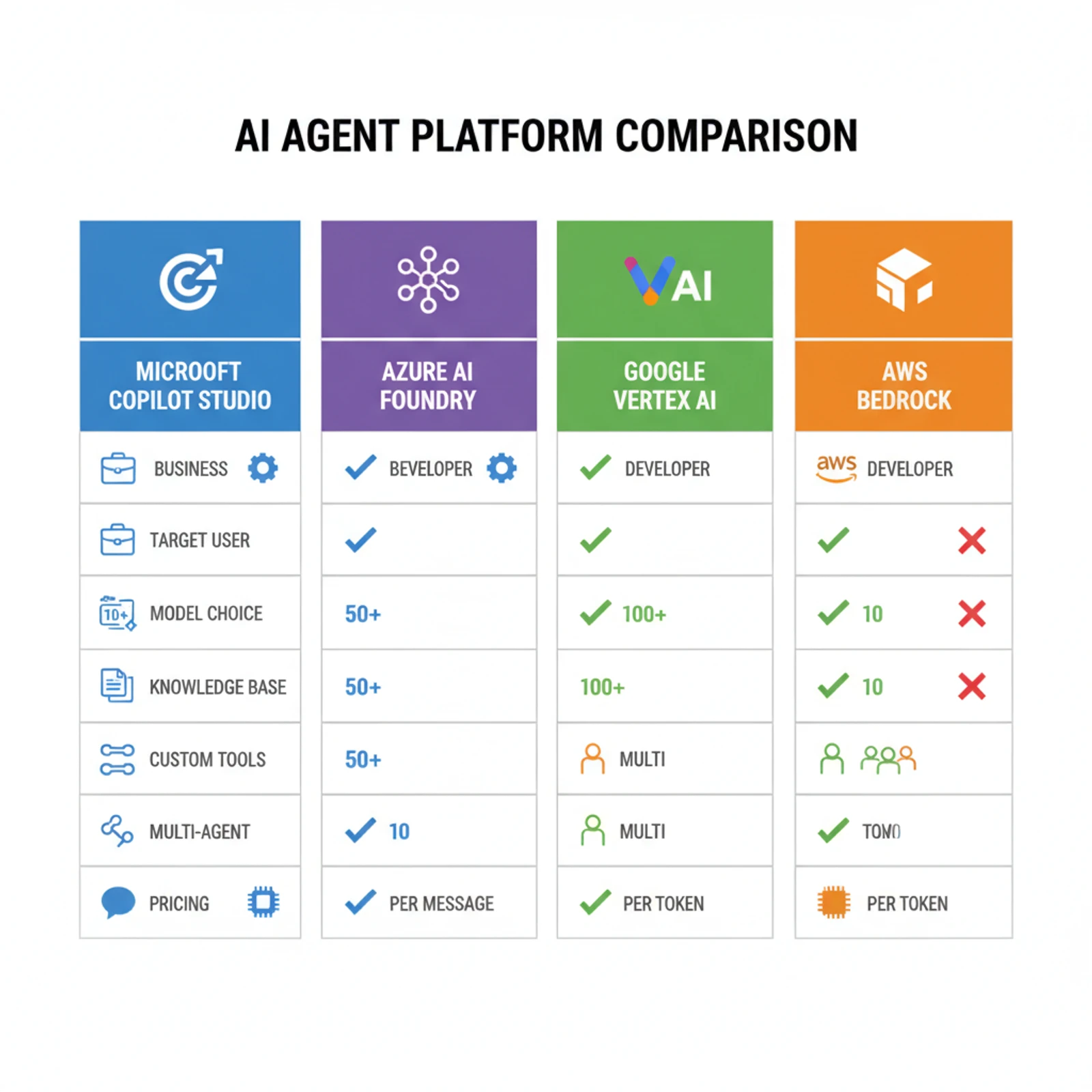

Let's look at the three major platforms and what they give you out of the box.

Microsoft Copilot Studio + Azure AI Foundry

Microsoft has two complementary products. Copilot Studio is the no-code/low-code builder aimed at business users. Azure AI Foundry (formerly Azure AI Studio) is the developer-oriented platform for more complex agents.

Copilot Studio lets you:

- Create agents through a visual canvas — drag topics, add conditions, connect actions

- Upload knowledge documents (PDF, Word, Excel, web pages)

- Connect to Microsoft 365 data (SharePoint, Teams, Outlook)

- Deploy to Teams, websites, or custom channels

- Use prebuilt connectors for Salesforce, ServiceNow, SAP, and 1,200+ services via Power Platform

Azure AI Foundry gives you:

- Fine-grained model selection (GPT-4o, GPT-4o-mini, Phi, Llama, Mistral, or your own fine-tuned model)

- Temperature, top-P, and max-token controls

- Grounding with Azure AI Search (vector + semantic search over your data)

- Multi-agent orchestration with Semantic Kernel or AutoGen

- Content safety filters with customizable severity thresholds

- Evaluation pipelines to test agent quality before deployment

The gap between the two is significant. Copilot Studio is where a marketing manager builds a FAQ bot in an afternoon. AI Foundry is where an engineering team builds a claims processing agent over several months.

Google Vertex AI Agent Builder + ADK

Google's Agent Builder lives inside Vertex AI and integrates tightly with the Google Cloud ecosystem.

Key capabilities:

- Agent creation with natural language instructions or code (Python SDK)

- Grounding with Google Search, Vertex AI Search, or custom data stores

- Tool use via OpenAPI specs, Cloud Functions, or custom code

- Agent Development Kit (ADK) for building multi-agent systems

- A2A (Agent-to-Agent) protocol for inter-agent communication

- Integration with BigQuery, Cloud Storage, and Google Workspace

- Session-based memory management

Google's ADK is particularly interesting. Released as open source, it lets you define agents and their tools in Python, orchestrate multiple agents, and deploy them as Cloud Run services. The A2A protocol (announced April 2025) defines a standard for agents built on different platforms to communicate with each other.

AWS Amazon Bedrock Agents

Amazon's approach leans heavily on its existing infrastructure:

- Choose from Anthropic Claude, Meta Llama, Amazon Titan, and others

- Define agent actions as Lambda functions

- Knowledge bases backed by Amazon OpenSearch, Aurora, or Pinecone

- Guardrails for content filtering and PII redaction

- Step-by-step agent tracing for debugging

- Integration with S3, DynamoDB, and other AWS services
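In Bedrock, an action is typically backed by a Lambda function that receives the function name and parameters the agent chose and returns its result in a response envelope. The sketch below assumes the function-details action-group format (event fields `actionGroup`, `function`, `parameters`; result under `functionResponse.responseBody`) as I understand it from the Bedrock Agents documentation, so verify the exact shape against the current docs. The `get_order_status` function and the `lookup_status` helper are hypothetical.

```python
import json

def lookup_status(order_id: str) -> str:
    # Hypothetical stand-in for a DynamoDB or API lookup.
    return {"A-100": "shipped"}.get(order_id, "not found")

def lambda_handler(event, context):
    """Sketch of a Lambda behind a Bedrock Agents action group (function-details style)."""
    # Parameters arrive as a list of {"name", "type", "value"} dicts with string values.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}

    if event["function"] == "get_order_status":          # hypothetical function name
        order_id = params.get("order_id", "")
        body = json.dumps({"order_id": order_id, "status": lookup_status(order_id)})
    else:
        body = f"Unknown function: {event['function']}"

    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": {"responseBody": {"TEXT": {"body": body}}},
        },
        # Pass session state through unchanged.
        "sessionAttributes": event.get("sessionAttributes", {}),
        "promptSessionAttributes": event.get("promptSessionAttributes", {}),
    }

# Local usage with a hand-built sample event.
event = {"actionGroup": "orders", "function": "get_order_status",
         "parameters": [{"name": "order_id", "type": "string", "value": "A-100"}]}
print(lambda_handler(event, None)["response"]["functionResponse"])
```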

Comparison at a glance:

| Feature | Copilot Studio | AI Foundry | Vertex AI | Bedrock |
|---|---|---|---|---|
| Target User | Business users | Developers | Developers | Developers |
| No-Code Builder | Yes | No | Partial | No |
| Model Choice | GPT-4o family | 30+ models | Gemini + partners | Claude, Llama, Titan |
| Knowledge Base | SharePoint, web | Azure AI Search | Vertex AI Search | OpenSearch, Aurora |
| Custom Tools | Power Automate | Functions, APIs | Cloud Functions | Lambda |
| Multi-Agent | Limited | AutoGen/Semantic Kernel | ADK + A2A | Limited |
| Evaluation | Basic analytics | Built-in eval pipelines | Vertex AI Evaluation | Traces + CloudWatch |
| Pricing Model | Per message | Per token + infra | Per token + infra | Per token + infra |

The Frameworks Behind the Curtain

The configurable platforms don't exist in a vacuum. They're built on top of — or compete with — a set of open-source agent frameworks that do the heavy lifting.

LangChain / LangGraph

The most widely adopted agent framework. LangChain provides the building blocks — model wrappers, tool interfaces, memory systems, retrieval chains. LangGraph (introduced in 2024) adds stateful, graph-based orchestration for multi-step agents.

- Strengths: Massive ecosystem, extensive integrations (700+ components), strong community

- Weakness: Abstraction layers can be opaque; debugging agent behavior requires understanding multiple layers

- Use case: When you need maximum flexibility and don't mind complexity
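"Stateful, graph-based orchestration" means the agent's steps are nodes that read and update a shared state, and edges (some of them conditional) decide which node runs next. The framework-free sketch below illustrates that idea only. It does not use LangGraph's API, and the `write`/`review` nodes and the two-revision stopping rule are hypothetical.

```python
from typing import Callable, Dict, TypedDict

class State(TypedDict):
    question: str
    draft: str
    revisions: int

# Nodes read the shared state and return a partial update to merge into it.
def write(state: State) -> dict:
    n = state["revisions"] + 1
    return {"draft": f"Answer to {state['question']!r} (v{n})", "revisions": n}

def review(state: State) -> dict:
    return {}   # a real reviewer node might attach critique to the state

# Edges return the name of the next node; this one is conditional.
def after_review(state: State) -> str:
    return "write" if state["revisions"] < 2 else "END"

NODES: Dict[str, Callable[[State], dict]] = {"write": write, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {"write": lambda s: "review",
                                             "review": after_review}

def run_graph(state: State, start: str = "write", max_hops: int = 10) -> State:
    node = start
    for _ in range(max_hops):
        if node == "END":
            return state
        state = {**state, **NODES[node](state)}   # merge the node's update
        node = EDGES[node](state)                  # follow the outgoing edge
    raise RuntimeError("graph did not reach END within max_hops")

final = run_graph({"question": "What is ReAct?", "draft": "", "revisions": 0})
print(final["draft"])
```

Because the state is explicit, it can be inspected or persisted between hops, which is what makes this style easier to reason about than a free-running loop.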

CrewAI

A framework specifically designed for multi-agent systems. You define "crews" of agents, each with a role, goal, and set of tools. The agents collaborate on tasks with defined delegation patterns.

- Strengths: Intuitive multi-agent design, role-based architecture, good for structured workflows

- Weakness: Less flexible than LangGraph for non-standard patterns

- Use case: When your problem naturally decomposes into specialized roles (researcher, writer, reviewer)

LlamaIndex

Originally built for RAG (Retrieval-Augmented Generation), LlamaIndex has evolved into a full agent framework with its "Workflows" system.

- Strengths: Best-in-class data ingestion and retrieval, 160+ data connectors, strong for knowledge-heavy agents

- Weakness: Agent capabilities are newer and less mature than LangChain's

- Use case: When your agent primarily needs to reason over large document collections

AutoGen (Microsoft) / Semantic Kernel

Microsoft's own frameworks. AutoGen specializes in multi-agent conversations where agents debate and refine responses. Semantic Kernel is more of a traditional orchestration framework that integrates with Azure services.

- Strengths: Deep Microsoft ecosystem integration, good for enterprise patterns

- Weakness: Smaller community than LangChain, tighter coupling to Azure

- Use case: Enterprise agents within the Microsoft stack

How they relate to the platforms: Azure AI Foundry integrates with Semantic Kernel and AutoGen. Google's ADK is a standalone framework but competes in the same space. AWS Bedrock's agent runtime is proprietary. Copilot Studio doesn't expose any of this; it's all hidden behind the visual builder.

Where Configurable Agents Work (And Where They Don't)

Based on what I've seen building and evaluating agents across these platforms, here's an honest assessment.

Configurable agents work well for:

Internal FAQ and knowledge retrieval. Upload your employee handbook, IT policies, and product documentation. Let people ask questions in natural language instead of searching through SharePoint. This is the sweet spot — the failure cost is low, the value is immediate, and the knowledge base is bounded.

Simple task automation. "When someone submits a support ticket, categorize it and route it to the right team." The platforms handle this well because the decision tree is relatively shallow and the actions are well-defined.
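The ticket-routing case is shallow enough to sketch in a few lines. On a configurable platform the categorization step would be a model call; here a keyword matcher stands in for it, and the categories, keywords, and queue names are all hypothetical. The shape is what matters: one classification, a fixed lookup, and a fallback for anything unrecognized.

```python
# Hypothetical routing table: category -> team queue.
ROUTES = {"billing": "finance-queue", "login": "it-helpdesk", "bug": "engineering"}
KEYWORDS = {
    "billing": ("invoice", "refund", "charge"),
    "login": ("password", "sign in", "locked out"),
    "bug": ("error", "crash", "broken"),
}

def categorize(ticket: str) -> str:
    """Stand-in for the LLM classification step: first matching category wins."""
    text = ticket.lower()
    for category, words in KEYWORDS.items():
        if any(w in text for w in words):
            return category
    return "unknown"

def route(ticket: str) -> str:
    # Anything the classifier can't place goes to a human triage queue.
    return ROUTES.get(categorize(ticket), "human-triage")

print(route("I was charged twice and need a refund"))
```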

Customer-facing Q&A with guardrails. Product information bots, appointment schedulers, order status checkers. When the scope is narrow and the data is structured, configurable agents are genuinely useful.

Configurable agents struggle with:

Multi-step reasoning across systems. "Analyze our Q4 sales decline, cross-reference with marketing spend changes, check competitor pricing data, and recommend a strategy." This requires the agent to maintain context across many tool calls, handle partial failures, and synthesize information from heterogeneous sources. Configurable platforms break down here.

Ambiguous or open-ended tasks. "Help me prepare for tomorrow's board meeting." The agent needs to understand what you typically present, what's changed since last quarter, and what questions the board is likely to ask. This requires deep organizational context that no knowledge base upload provides.

Processes with real consequences. Approving invoices, modifying customer records, executing trades. The error cost is high, and configurable agents don't have the reliability guarantees you need. A 95% accuracy rate sounds good until you realize it means 1 in 20 transactions might be wrong.
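The arithmetic is worth spelling out, because volume turns a small error rate into a steady stream of mistakes. The 10,000-invoice monthly volume below is a hypothetical figure, and the batch calculation assumes errors are independent across transactions.

```python
accuracy = 0.95
error_rate = 1 - accuracy

# "1 in 20": the average number of transactions per error.
print(round(1 / error_rate))                 # 20

# Expected wrong transactions at a hypothetical 10,000 invoices a month.
monthly_volume = 10_000
print(round(monthly_volume * error_rate))    # 500

# Chance that a batch of 20 independent transactions contains at least one error.
print(round(1 - accuracy ** 20, 3))          # 0.642
```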

Evolving requirements. Business processes change. When the agent needs to adapt its behavior based on new policies, edge cases, or organizational changes, you need code — not configuration.

The pattern is clear: configurable agents handle the 80% case well. The remaining 20% — which is often where the actual business value lives — requires custom engineering.

Building Production Agents: What the Platforms Don't Tell You

If you've worked with AI agents beyond demos, you know the real challenges aren't in the "build an agent" step. They're in everything that comes after.

Memory Management

LLMs have finite context windows. A conversational agent that handles 50 messages hits the limit quickly when each tool call adds context. Production agents need:

- Conversation summarization (compress old context without losing key facts)

- Working memory vs. long-term memory separation

- Selective retrieval (only load relevant context for the current task)

Configurable platforms rarely handle this fully out of the box. Most either truncate context (losing information) or fail when the window fills up.
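A minimal sketch of summarization, working vs. long-term memory, and selective retrieval, under loud simplifications: word counts stand in for tokens, `summarize` is a callback where a real system would make an LLM summarization call, and word overlap stands in for embedding-based retrieval. `ConversationMemory` and `toy_summarize` are hypothetical names, not any platform's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List

def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())

@dataclass
class ConversationMemory:
    budget: int                                      # max "tokens" of history per model call
    summarize: Callable[[str, List[str]], str]       # (old summary, evicted turns) -> new summary
    summary: str = ""                                # long-term memory: compressed older turns
    recent: List[str] = field(default_factory=list)  # working memory: verbatim recent turns

    def size(self) -> int:
        return count_tokens(self.summary) + sum(count_tokens(m) for m in self.recent)

    def add(self, message: str) -> None:
        self.recent.append(message)
        # Fold the oldest turns into the summary until history fits (always keep the newest).
        while len(self.recent) > 1 and self.size() > self.budget:
            self.summary = self.summarize(self.summary, [self.recent.pop(0)])

    def context(self, query: str, top_k: int = 2) -> List[str]:
        """Selective retrieval: the summary plus the recent turns that best overlap the query."""
        q = set(query.lower().split())
        ranked = sorted(self.recent, key=lambda m: len(q & set(m.lower().split())), reverse=True)
        return ([f"Summary: {self.summary}"] if self.summary else []) + ranked[:top_k]

def toy_summarize(old: str, evicted: List[str]) -> str:
    # Stand-in for an LLM call: keep only words containing a digit (IDs, amounts)
    # as crude "key facts".
    facts = [w for m in evicted for w in m.split() if any(c.isdigit() for c in w)]
    return " ".join(old.split() + facts)

mem = ConversationMemory(budget=16, summarize=toy_summarize)
for turn in ["user: my order number is A-100",
             "agent: thanks, let me check the order status",
             "user: also what is your refund policy"]:
    mem.add(turn)
print(mem.context("refund policy"))
```

The order number survives eviction only because the toy summarizer happens to keep it; with a real model, whether key facts survive compression is exactly what needs testing.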

Error Recovery

Agents fail. Tools return errors. APIs time out. The LLM misinterprets a result and spirals. Production agents need:

- Retry logic with exponential backoff

- Fallback tool selections

- Human-in-the-loop escalation when confidence is low

- Circuit breakers to prevent infinite loops

The platforms offer basic error handling, but real recovery logic requires custom code.
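These recovery pieces compose naturally. The sketch below wraps a primary tool in retries with exponential backoff and a simple consecutive-failure circuit breaker, falls back to a second tool, and escalates to a human when both fail. All names are hypothetical; the breaker has no half-open reset timer, and escalation here triggers on failure rather than on a confidence score, which would be a second trigger in a real system.

```python
import time
from typing import Callable

class CircuitOpen(Exception):
    """Raised when a tool has failed too many times in a row to keep calling it."""

class CircuitBreaker:
    def __init__(self, threshold: int = 3):
        self.threshold = threshold
        self.failures = 0          # consecutive failures; no automatic reset timer here

    def call(self, fn: Callable[[], str]) -> str:
        if self.failures >= self.threshold:
            raise CircuitOpen("circuit open: skipping this tool")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            raise
        self.failures = 0          # any success closes the circuit again
        return result

def with_retries(fn: Callable[[], str], attempts: int = 3, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry with exponential backoff: wait base_delay, then 2x, 4x, ... between attempts."""
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise                              # out of attempts: surface the error
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")

def run_tool(primary: Callable[[], str], fallback: Callable[[], str],
             breaker: CircuitBreaker, **retry_kwargs) -> str:
    """Primary tool (retried, behind a breaker) -> fallback tool -> human escalation."""
    try:
        return breaker.call(lambda: with_retries(primary, **retry_kwargs))
    except Exception:
        pass
    try:
        return with_retries(fallback, **retry_kwargs)
    except Exception:
        return "ESCALATE: handing off to a human reviewer"

def flaky_search() -> str:
    raise TimeoutError("search API timed out")

breaker = CircuitBreaker(threshold=2)
print(run_tool(flaky_search, lambda: "cached result", breaker, sleep=lambda s: None))
```

Injecting `sleep` keeps the backoff testable without real waiting.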

Evaluation and Testing

How do you know your agent works? Not just for the demo scenario — for the thousands of edge cases your users will throw at it. Production agent evaluation requires:

- Golden datasets of expected input-output pairs

- Automated regression testing when you change prompts or models

- Cost monitoring (LLM calls add up fast in agentic loops)

- Latency tracking (multi-step agents can take 30+ seconds per response)

Azure AI Foundry's evaluation pipelines are the most mature here, but even they require significant setup. Most teams are still evaluating agents manually — which doesn't scale.
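A golden-dataset check can start very small. The harness below runs an agent over expected cases and reports pass rate, cost, and worst-case latency. It assumes the agent callable returns its answer together with the tokens it consumed, uses a substring match as the pass criterion, and takes a hypothetical per-1,000-token price; `Case`, `evaluate`, and `stub_agent` are illustrative names.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Case:
    prompt: str
    must_contain: str     # simple pass criterion; real suites often use graders or LLM judges

def evaluate(agent: Callable[[str], Tuple[str, int]], golden: List[Case],
             usd_per_1k_tokens: float) -> dict:
    """Run a non-empty golden dataset; report pass rate, total cost, and worst latency."""
    passed, tokens, latencies = 0, 0, []
    for case in golden:
        start = time.perf_counter()
        answer, used_tokens = agent(case.prompt)     # agent returns (text, tokens consumed)
        latencies.append(time.perf_counter() - start)
        tokens += used_tokens
        passed += case.must_contain.lower() in answer.lower()
    return {"pass_rate": passed / len(golden),
            "cost_usd": tokens / 1000 * usd_per_1k_tokens,
            "max_latency_s": max(latencies)}

golden = [Case("How long is the refund window?", "30 days"),
          Case("What are support hours?", "9am")]

def stub_agent(prompt: str) -> Tuple[str, int]:
    # Hypothetical agent: (answer text, tokens used).
    if "refund" in prompt:
        return "Refunds are accepted within 30 days.", 600
    return "Support is available around the clock.", 400

report = evaluate(stub_agent, golden, usd_per_1k_tokens=0.01)
print(report)
```

Wiring this into CI as a threshold assertion on `pass_rate` turns it into a regression test for prompt or model changes.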

Security and Governance

The AI agent security landscape is still immature. Key concerns:

- Prompt injection: Malicious content in documents or tool results that hijacks the agent's behavior

- Data leakage: The agent accidentally includes sensitive information in responses

- Privilege escalation: The agent takes actions beyond its intended scope

- Audit trails: Regulators want to know exactly what the agent did and why

Every platform has some guardrails, but the threat model for agents is fundamentally different from traditional software. An agent that can call arbitrary tools with natural language instructions has an attack surface that security teams are still learning to assess.
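Two of the concerns listed above, privilege escalation and audit trails, have a straightforward partial mitigation: give each agent an explicit tool allowlist and log every attempted call, including denied ones. The sketch below uses hypothetical tool names and assumes tool arguments are JSON-serializable. It does not detect prompt injection, but it limits what injected instructions can make the agent do and leaves a record of what it tried.

```python
import json
import time
from typing import Any, Callable, Dict, List, Set

class GuardedToolbox:
    """Allowlist tools per agent and write an audit record for every attempted call."""
    def __init__(self, tools: Dict[str, Callable[..., Any]], allowed: Set[str]):
        self.tools, self.allowed = tools, allowed
        self.audit_log: List[str] = []

    def call(self, name: str, reason: str, **args: Any) -> Any:
        permitted = name in self.allowed and name in self.tools
        # Log before acting, including denials, so the trail shows what the agent tried.
        self.audit_log.append(json.dumps({
            "ts": time.time(), "tool": name, "args": args,
            "reason": reason, "permitted": permitted,
        }))
        if not permitted:
            raise PermissionError(f"tool {name!r} is not allowed for this agent")
        return self.tools[name](**args)

box = GuardedToolbox(
    tools={"get_order": lambda order_id: {"id": order_id, "status": "shipped"},
           "issue_refund": lambda order_id: f"refunded {order_id}"},
    allowed={"get_order"},               # this agent is read-only
)
print(box.call("get_order", reason="user asked for status", order_id="A-100"))
try:
    box.call("issue_refund", reason="instruction found in a document", order_id="A-100")
except PermissionError as err:
    print("blocked:", err)
```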

The Multi-Agent Future: Coordination Is the Hard Problem

The next frontier is multi-agent systems — agents that collaborate, delegate, and check each other's work. Every platform is investing here:

- Microsoft: AutoGen framework, Magentic-One (general-purpose multi-agent team)

- Google: ADK with A2A protocol for cross-platform agent communication

- Anthropic: Claude Code's agent teams with shared task lists and messaging

- Open source: CrewAI, LangGraph's multi-agent patterns, MetaGPT

The architecture patterns emerging for multi-agent systems mirror human organizational design:

| Pattern | How It Works | Best For |
|---|---|---|
| Hierarchical | A lead agent delegates to specialist agents | Complex analysis, document processing |
| Peer-to-Peer | Agents communicate directly with shared state | Brainstorming, consensus building |
| Pipeline | Output of one agent feeds the next | Content creation, data transformation |
| Debate | Multiple agents argue positions, a judge decides | Decision support, risk assessment |
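Stripped of the LLM calls, the pipeline and hierarchical patterns are just different ways of wiring functions together. In this sketch each "agent" is a plain function standing in for one model call, and the roles and task strings are hypothetical. Every function call here would be a separate model invocation in a real system, which is where the extra cost and latency come from.

```python
from typing import Callable, Dict, List, Tuple

Agent = Callable[[str], str]   # stand-in: each "agent" is one LLM call, text in, text out

def pipeline(stages: List[Agent], task: str) -> str:
    """Pipeline: each agent's output is the next agent's input."""
    for stage in stages:
        task = stage(task)
    return task

def hierarchical(split: Callable[[str], List[Tuple[str, str]]],
                 specialists: Dict[str, Agent],
                 merge: Callable[[List[str]], str],
                 task: str) -> str:
    """Hierarchical: a lead splits the task, specialists handle pieces, the lead merges."""
    assignments = split(task)                      # [(specialist name, subtask), ...]
    results = [specialists[name](sub) for name, sub in assignments]
    return merge(results)

# Usage with hypothetical roles; in a real system each function would call a model.
def research(t: str) -> str:
    return f"notes({t})"

def write(t: str) -> str:
    return f"draft({t})"

def review(t: str) -> str:
    return f"reviewed({t})"

print(pipeline([research, write, review], "agent platforms"))

def lead_split(t: str) -> List[Tuple[str, str]]:
    return [("finance", f"{t}: revenue"), ("legal", f"{t}: contracts")]

def lead_merge(parts: List[str]) -> str:
    return " | ".join(parts)

specialists = {"finance": lambda s: f"F[{s}]", "legal": lambda s: f"L[{s}]"}
print(hierarchical(lead_split, specialists, lead_merge, "Q4 review"))
```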

Multi-agent systems produce richer output but cost more (each agent is a separate LLM context), take longer (coordination overhead), and are harder to debug (failure cascades across agents). Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, citing escalating costs, unclear business value, or inadequate risk controls.

The interesting question isn't whether multi-agent systems work — they clearly do for specific use cases. It's whether the coordination overhead is worth the quality improvement. For most business applications today, a single well-designed agent with good tools outperforms a poorly coordinated team of agents.

The Standards That Will Shape the Next Phase

Two protocols deserve attention because they'll likely define how agents interact with the broader ecosystem:

Model Context Protocol (MCP) by Anthropic. An open standard for connecting AI models to external tools and data sources. Think of it as USB-C for AI agents — a universal interface that means tools built once work with any MCP-compatible agent. Adoption is growing fast, with Claude, OpenAI, and multiple open-source frameworks supporting it.
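Under the hood, MCP messages are JSON-RPC 2.0, and tool use centers on two methods from the specification: `tools/list` (advertise tools, each with a JSON Schema for its inputs) and `tools/call` (invoke one with arguments). The toy in-process dispatcher below shows only the message shapes. It skips the initialization handshake and transport that real servers implement (normally via an official MCP SDK), and the `get_weather` tool is hypothetical.

```python
import json

# A toy stand-in for an MCP server's tool handling (no handshake, no transport).
TOOLS = {
    "get_weather": {
        "description": "Current weather for a city (hypothetical tool).",
        "inputSchema": {"type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"]},
        "handler": lambda args: f"Sunny in {args['city']}",
    }
}

def handle(raw: str) -> str:
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": n, "description": t["description"],
                             "inputSchema": t["inputSchema"]} for n, t in TOOLS.items()]}
    elif req["method"] == "tools/call":
        tool = TOOLS[req["params"]["name"]]
        text = tool["handler"](req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Oslo"}}}
print(handle(json.dumps(call)))
```

Because any MCP-compatible client speaks these same messages, the tool definition is written once rather than once per agent platform.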

Agent-to-Agent Protocol (A2A) by Google. An open standard for agents built on different platforms to communicate with each other. A Copilot Studio agent could theoretically collaborate with a Vertex AI agent through A2A. This is still early, but it addresses a real problem: enterprise environments rarely standardize on a single vendor.

If both protocols achieve broad adoption, the agent ecosystem could evolve like the web did — from proprietary platforms to interoperable services. That would dramatically change the build-vs-buy calculation.

What I'd Actually Recommend

If you're evaluating AI agents for your organization, here's a practical framework:

Start with configurable platforms when:

- The use case is well-defined with clear boundaries

- The primary function is knowledge retrieval or simple task routing

- You need a working prototype in days, not months

- The failure cost is low (wrong answer = inconvenience, not financial loss)

Move to custom frameworks when:

- The agent needs to orchestrate multiple systems

- Business logic requires conditional flows that change over time

- Error recovery must be sophisticated

- You need rigorous evaluation and testing

- Security and compliance requirements are strict

Skip agents entirely when:

- A traditional API integration solves the problem

- The process is too critical for probabilistic decision-making

- You don't have the data infrastructure to ground the agent

- The ROI doesn't justify the ongoing LLM costs

The agent landscape in early 2026 reminds me of the cloud platform wars circa 2012. Everyone's building, the tooling is improving fast, standards are emerging, and the early adopters are learning expensive lessons that will benefit everyone who comes after them. The platforms will get better. The frameworks will mature. The standards will stabilize.

But right now, the gap between what's demonstrated on stage and what works in production is real. The companies that succeed with agents are the ones that start with bounded, high-value use cases and expand incrementally — not the ones that try to build an "autonomous AI workforce" on day one.

Wrapping Up

Configurable AI agent platforms have genuinely lowered the barrier to building agents. A business analyst can now create a functional knowledge assistant in an afternoon. That's remarkable progress.

But "configurable" and "production-ready" are different things. The platforms handle the easy parts — model selection, basic tool integration, simple conversation flows. The hard parts — memory management, error recovery, multi-system orchestration, security, evaluation — still require engineering.

The right approach isn't to choose between configurable and custom. It's to use configurable platforms for what they're good at (rapid prototyping, simple use cases, internal tools) and invest in custom engineering where the business value justifies it (complex workflows, customer-facing systems, high-stakes decisions).

The agent revolution is real. The no-code part? That's mostly marketing.

Sources: Microsoft Learn (Copilot Studio, AI Foundry documentation), Google Cloud (Vertex AI Agent Builder, ADK documentation), AWS (Amazon Bedrock Agents), LangChain documentation, CrewAI documentation, Gartner AI Agent Market Analysis 2025, Deloitte AI Agent Trends Report 2025