It starts with a simple question. Someone on the leadership team asks: "How many customers do we actually have?"

You'd think this would be easy. Pull a count from the database. But then you discover that the CRM says 45,000, the billing system says 52,000, the marketing platform says 61,000, and the support desk says 38,000. Same company. Same customers. Four different numbers. Nobody knows which one is right — because none of them are.

If you've worked in data long enough, you've lived this moment. It's the moment that launches a million-dollar procurement process, a two-year implementation timeline, and — if history is any guide — a very high chance of failure.

Welcome to Master Data Management.

A Brief History of Wanting One Source of Truth

The story of MDM begins in the 1990s, though nobody called it that yet. Organizations were drowning in fragmented information systems. Customer records lived in dozens of places: ERP, CRM, billing, support tickets, spreadsheets taped to monitors. Few people talked about "data silos"; silos were just... how things worked.

The first generation of solutions appeared in the early 2000s. They were straightforward: build a centralized repository, use ETL tools to pull data from source systems, clean it up, and create a single unified record. The "golden record," as it came to be known — the one true version of each customer, product, or supplier.

It was a good idea. It was also a nightmare to implement.

By the mid-2000s, the industry realized that the problem wasn't just technical — it was organizational. Data quality wasn't an IT problem. It was a people problem. You needed business stakeholders to agree on definitions. What even is a "customer"? Is it someone who made a purchase? Someone who has an account? Someone who once clicked an ad? The answer depends on who you ask, and nobody agrees.

This era gave birth to formal data governance: Chief Data Officers, Data Stewards, governance councils, policy frameworks. The second generation of MDM shifted focus from pure technology to business process. The tools got more sophisticated. The projects got more expensive. And the failure rates stayed stubbornly high.

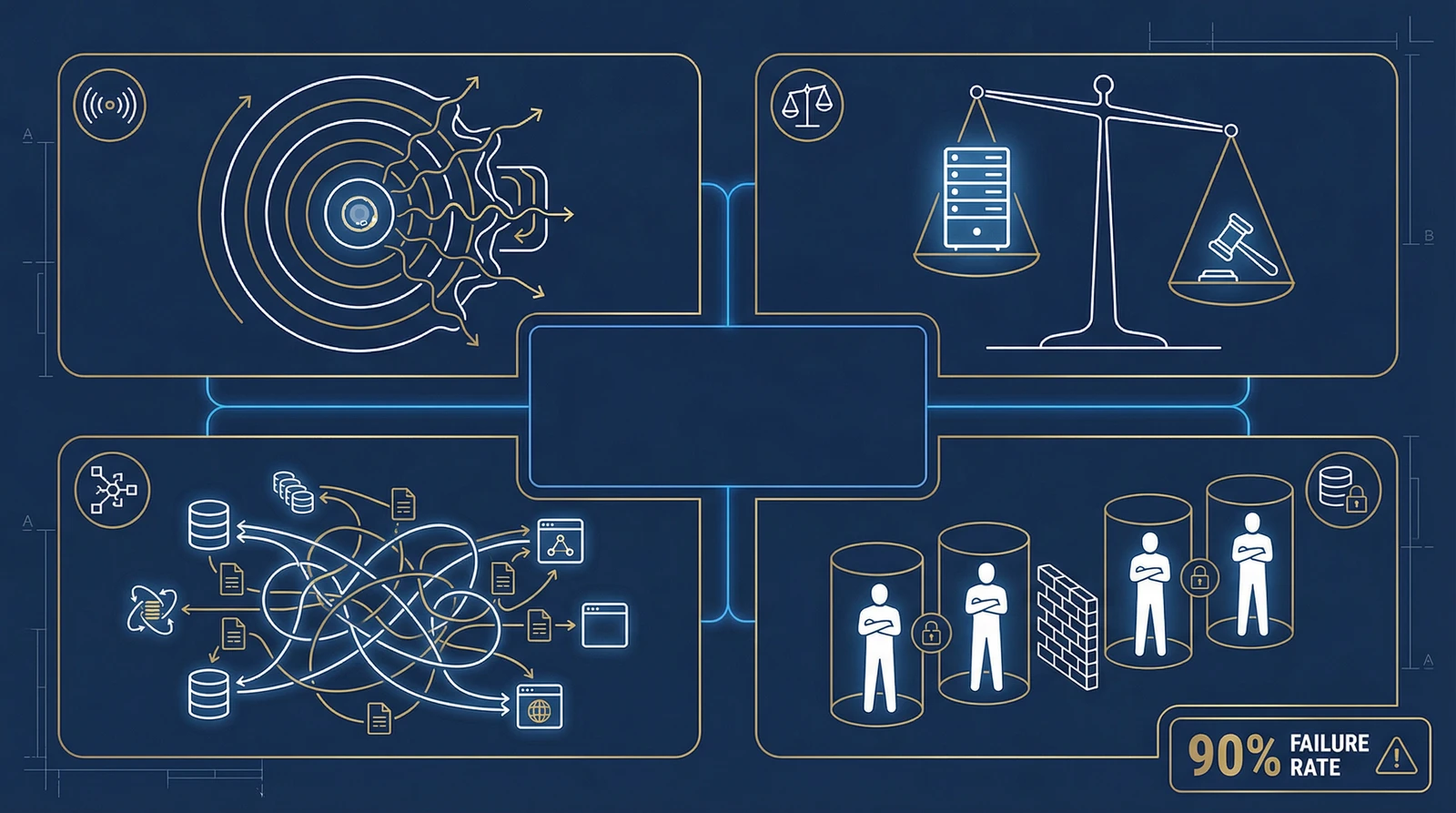

The Uncomfortable Truth: A 90% Failure Rate

Let's talk about the number that the MDM vendor brochures don't put on the front page.

Gartner has noted that around 90% of businesses fail when first attempting to implement and maintain an MDM project. That's not a typo. Nine out of ten.

A 2011 survey of 192 large organizations found that only 24% described their MDM projects as "successful or better." And 90% of organizations fail to even collect KPIs for their MDM programs — which means they can't tell whether it's working because they never defined what "working" means.

Why do so many projects fail? The patterns are consistent:

Scope creep disguised as ambition. An MDM project starts with "let's unify customer data" and expands to "let's also do products, suppliers, locations, reference data, and maybe build a data quality engine while we're at it." Each addition compounds the complexity.

Technology over governance. Organizations buy a platform and expect it to solve the problem. But if your business units can't agree on what a "customer" is, no software will fix that. The tool becomes an expensive database that nobody trusts.

The integration tax. Legacy systems don't give up their data gracefully. Incompatible data models, proprietary formats, undocumented APIs — connecting 20 source systems is an engineering marathon, not a sprint.

Organizational resistance. Data stewardship requires people to change how they work. It requires ownership. It requires accountability. Most organizations underestimate how hard it is to change human behavior — far harder than changing technology.

The result? Consultants who specialize in MDM often get called in to clean up after a failed first attempt. "Attempt number X" is a common phrase in this industry.

What You Actually Need: The Golden Record Question

Here's the thing that gets lost in the MDM hype. When you strip away the governance frameworks, the stewardship councils, the data quality engines, the reference data management, and the multi-domain orchestration — what do most organizations actually want?

A golden record.

They want to look at their customer data and see one record per customer. They want "John Smith" at "123 Main St" in the CRM to be recognized as the same person as "J. Smith" at "123 Main Street" in the billing system. They want to know that when they count customers, they get one number. The right number.
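Here is a minimal rule-based sketch in Python that makes the "John Smith" example concrete. Several pieces are illustrative assumptions: the abbreviation table, the rule that an initial matches a full first name, and the 0.9 similarity threshold. `difflib.SequenceMatcher` also stands in for the string metrics (edit distance, Jaro-Winkler and similar) that dedicated matching tools typically use.

```python
import re
from difflib import SequenceMatcher

# Illustrative abbreviation table (an assumption); real pipelines use fuller
# lists or an address-verification service.
STREET_ABBREVIATIONS = {"st": "street", "ave": "avenue", "rd": "road"}


def _tokens(text: str) -> list[str]:
    """Lowercase, replace punctuation with spaces, and split into tokens."""
    return re.sub(r"[^\w\s]", " ", text.lower()).split()


def normalize_address(address: str) -> str:
    """Expand common street abbreviations so 'St' and 'Street' compare equal."""
    return " ".join(STREET_ABBREVIATIONS.get(t, t) for t in _tokens(address))


def names_compatible(a: str, b: str) -> bool:
    """Same last token, and first tokens equal or one is the other's initial."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta or not tb or ta[-1] != tb[-1]:
        return False
    first_a, first_b = ta[0], tb[0]
    if first_a == first_b:
        return True
    short, long_ = sorted((first_a, first_b), key=len)
    return len(short) == 1 and long_.startswith(short)


def likely_same(rec_a: dict, rec_b: dict, threshold: float = 0.9) -> bool:
    """Hypothetical rule: compatible names and near-identical normalized addresses."""
    address_similarity = SequenceMatcher(
        None, normalize_address(rec_a["address"]), normalize_address(rec_b["address"])
    ).ratio()
    return names_compatible(rec_a["name"], rec_b["name"]) and address_similarity >= threshold
```

Hand-written rules like these break quickly on real data. Nicknames, typos, and apartment numbers all defeat them, and ML-based matchers are meant to close exactly that gap.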

That's it. That's the 80% use case.

Most enterprises already have their own quality management processes. They have their own reporting tools. They have their own governance structures — maybe informal, maybe imperfect, but functional. What they're missing is the matching and merging. The entity resolution. The golden record.
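Matching produces pairs, but merging needs groups. Suppose a CRM record matches a billing record, and that billing record matches a support record. All three then describe one customer. One way to sketch that step, assuming pairwise matches are already available, is a union-find over record IDs. The IDs and function name below are hypothetical.

```python
def cluster_matches(record_ids: list[str], matched_pairs: list[tuple[str, str]]) -> list[list[str]]:
    """Group records into entities via the transitive closure of pairwise matches."""
    parent = {r: r for r in record_ids}

    def find(r: str) -> str:
        # Path halving keeps the union-find trees shallow.
        while parent[r] != r:
            parent[r] = parent[parent[r]]
            r = parent[r]
        return r

    for a, b in matched_pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    clusters: dict[str, list[str]] = {}
    for r in record_ids:
        clusters.setdefault(find(r), []).append(r)
    return list(clusters.values())


records = ["crm:1", "billing:7", "support:3", "crm:2"]
pairs = [("crm:1", "billing:7"), ("billing:7", "support:3")]
entities = cluster_matches(records, pairs)  # two customers, not four records
```

The number of clusters is the deduplicated customer count. One caveat: transitive closure can chain a few weak links into one oversized cluster. For that reason, production tools usually score or review clusters rather than trusting every link.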

And here's where the build-vs-buy question gets interesting. Because if all you need is entity resolution and golden record creation, you're looking at one specific technical problem — not the full cathedral that MDM vendors are selling.

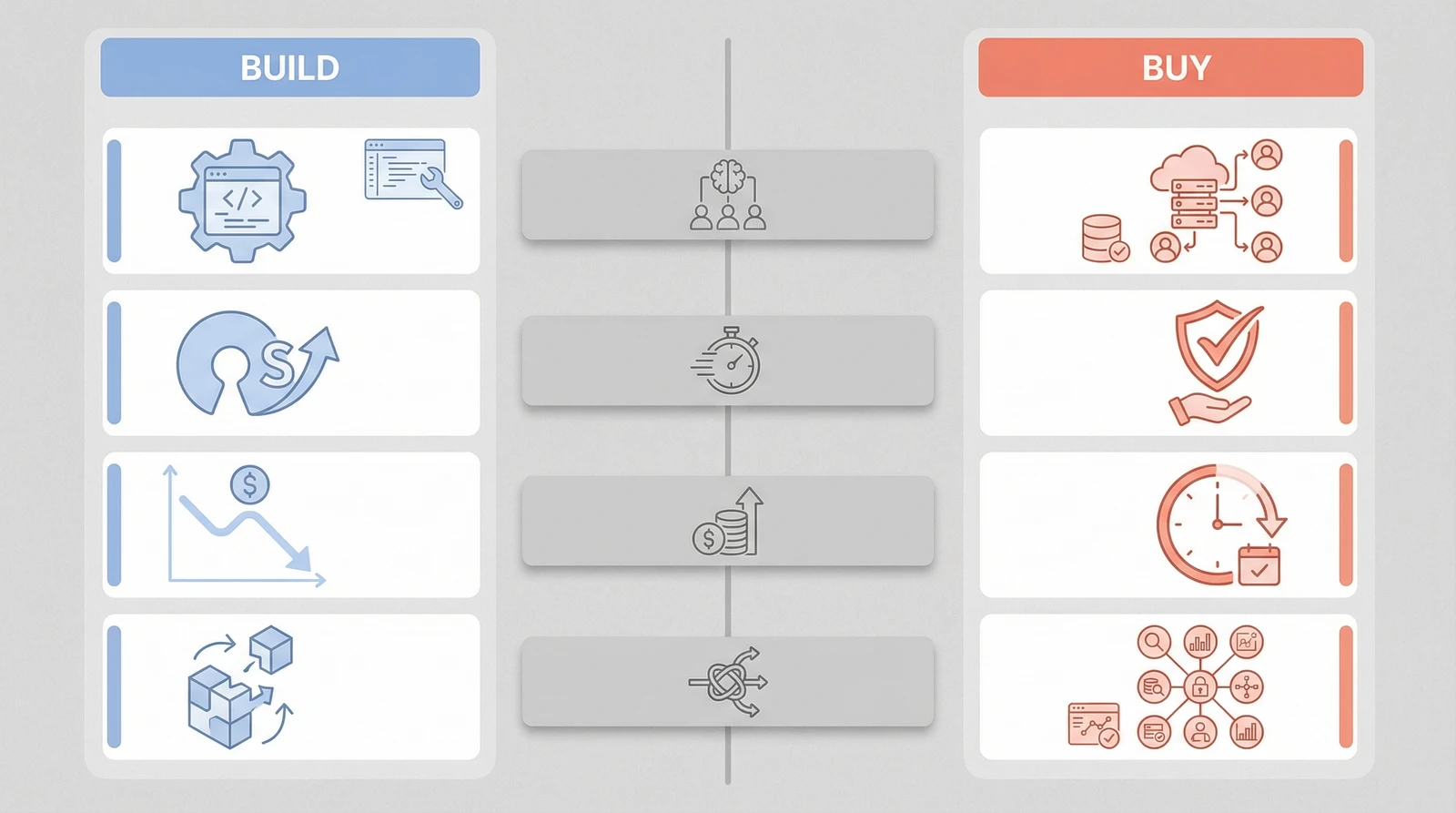

The Buy Side: What You're Actually Paying For

The MDM market is projected to grow from $19.24 billion in 2025 to $94.08 billion by 2035. That's a lot of money flowing to vendors. Let's look at what it buys.

The major players — Informatica, Reltio, Profisee, Stibo Systems, SAP, IBM — offer comprehensive platforms. They handle matching, merging, survivorship rules, data stewardship workflows, data quality, reference data, hierarchy management, and cross-domain governance. These are mature, proven systems backed by decades of engineering.

But "comprehensive" comes with baggage:

Cost. Affordable options like Profisee and Semarchy start in the tens of thousands of dollars annually. Enterprise solutions from Informatica or SAP can run into hundreds of thousands — or millions — per year. And that's just licensing. Implementation, consulting, and training multiply the number.

Learning curve. These platforms are complex. Informatica, despite its capabilities, is widely described as complex to purchase and configure. Reltio locks you into vendor-hosted SaaS, limiting customization. Even the "user-friendly" options require specialized knowledge to implement effectively.

Yearly subscription model. You're renting, not owning. Stop paying and you lose access. This creates a long-term cost commitment that compounds over time — and switching vendors is expensive enough that you're effectively locked in.

Overkill for the use case. If you need a golden record and you buy a full MDM platform, you're buying a Swiss Army knife when you need a screwdriver. You'll configure maybe 30% of the features and maintain 100% of the complexity.

When does buying make sense? When you have genuine multi-domain needs (customer AND product AND supplier master data), when you need complex stewardship workflows with multiple approval levels, when regulatory compliance demands audit trails that a custom solution can't easily provide, or when you simply don't have the engineering team to build and maintain a solution.

The Build Side: What's Changed

Five years ago, "build your own MDM" meant writing thousands of lines of custom matching logic, implementing fuzzy string algorithms from scratch, and maintaining a fragile pipeline that broke every time someone changed a source schema. It was genuinely painful. Most people who tried it wished they'd bought a platform instead.

That landscape has shifted dramatically.

Open-source entity resolution has matured. Zingg, an ML-based entity resolution tool, has become a credible alternative to commercial matching engines. It learns matching patterns from labeled data and builds a blocking model that indexes near-similar records, so it compares only 0.05-1% of all possible record pairs instead of every record against every other. It then outputs matched clusters ready for merging. It runs natively on Spark and integrates directly with your data lakehouse.
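Blocking makes matching tractable. Instead of comparing all n(n-1)/2 pairs, you compare only records that share a cheap key. The sketch below uses a hand-written key (first three letters of the surname plus postcode), which is an assumption for illustration. Zingg learns its blocking functions from labeled data rather than applying a fixed rule like this.

```python
from collections import defaultdict
from itertools import combinations


def blocking_key(record: dict) -> str:
    """Hypothetical fixed key: first three letters of the surname plus postcode."""
    surname = record["name"].lower().split()[-1]
    return f"{surname[:3]}|{record['postcode']}"


def candidate_pairs(records: list[dict]) -> list[tuple[int, int]]:
    """Only records that share a blocking key become candidate pairs."""
    blocks: dict[str, list[int]] = defaultdict(list)
    for i, rec in enumerate(records):
        blocks[blocking_key(rec)].append(i)
    pairs: list[tuple[int, int]] = []
    for ids in blocks.values():
        pairs.extend(combinations(ids, 2))
    return pairs


records = [
    {"name": "John Smith", "postcode": "10001"},
    {"name": "J. Smith", "postcode": "10001"},
    {"name": "Jane Doe", "postcode": "10001"},
    {"name": "John Smyth", "postcode": "94105"},
]
pairs = candidate_pairs(records)  # 1 candidate pair instead of 6
```

The trade-off is recall. A true match that lands in a different block (say, because of a mistyped postcode) is never compared at all. Learned or multiple blocking rules exist largely to reduce that risk.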

Splink, from the UK's Ministry of Justice, implements the Fellegi-Sunter probabilistic record-linkage model and runs on several SQL backends, including DuckDB and Spark. It's battle-tested on government-scale datasets and completely open source.
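The core of the Fellegi-Sunter model fits in a few lines. Each compared field contributes a log-likelihood ratio built from two probabilities. The first, m, is the probability that the field agrees given the records truly match. The second, u, is the probability that it agrees given they don't. The m and u values below are made up; Splink estimates them from the data. This sketch computes the weights by hand and does not use Splink's API.

```python
import math

# Hypothetical (m, u) per field:
# m = P(field agrees | records match), u = P(field agrees | records don't match).
FIELD_PARAMS = {
    "surname": (0.95, 0.01),
    "postcode": (0.90, 0.05),
    "birth_year": (0.98, 0.02),
}


def match_weight(agreements: dict[str, bool]) -> float:
    """Sum of per-field log2 Bayes factors (agreement raises it, disagreement lowers it)."""
    total = 0.0
    for field, agrees in agreements.items():
        m, u = FIELD_PARAMS[field]
        total += math.log2(m / u) if agrees else math.log2((1 - m) / (1 - u))
    return total


def match_probability(weight: float, prior: float) -> float:
    """Update the prior match probability with the evidence: posterior odds = prior odds * 2**weight."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * 2 ** weight
    return posterior_odds / (1 + posterior_odds)
```

Summing the per-field weights and combining them with a prior turns a set of fuzzy agreements into an interpretable match probability. That probability is much easier to threshold and audit than an opaque similarity score.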

Modern data platforms have native capabilities. Databricks now supports entity resolution through marketplace offerings from Dun & Bradstreet and Experian. AWS launched Entity Resolution as a managed service. You can implement the core matching and merging logic within your existing data platform — no separate MDM system required.

dbt and the composable data stack. Data quality, transformation, and testing frameworks have become modular and composable. You can build survivorship rules as dbt models, data quality checks as dbt tests, and golden record outputs as materialized tables. It's version-controlled, testable, and runs in your existing infrastructure.
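Survivorship rules decide which value wins when matched records disagree. In a dbt project these would typically be SQL models; the Python below only illustrates the logic. The source ranking, the field names, and the three rules are hypothetical examples, not a standard. The rules are: most complete value for name, source priority for address, and most recent value for email.

```python
from datetime import date

# Hypothetical trust ranking for addresses: lower number wins.
SOURCE_PRIORITY = {"billing": 1, "crm": 2, "marketing": 3}


def _first_non_null(records: list[dict], field: str):
    """Return the first non-empty value of `field` in the given record order."""
    return next((r[field] for r in records if r.get(field)), None)


def build_golden_record(cluster: list[dict]) -> dict:
    """Apply three illustrative survivorship rules to one matched cluster."""
    by_priority = sorted(cluster, key=lambda r: SOURCE_PRIORITY[r["source"]])
    by_recency = sorted(cluster, key=lambda r: r["updated"], reverse=True)
    names = [r["name"] for r in cluster if r.get("name")]
    return {
        # Most complete value: the longest non-empty name.
        "name": max(names, key=len, default=None),
        # Source priority: billing addresses are assumed to be verified.
        "address": _first_non_null(by_priority, "address"),
        # Recency: contact details go stale, so the newest non-empty email wins.
        "email": _first_non_null(by_recency, "email"),
    }


cluster = [
    {"source": "crm", "name": "John Smith", "address": "123 Main St",
     "email": "john@old.example", "updated": date(2023, 5, 1)},
    {"source": "billing", "name": "J. Smith", "address": "123 Main Street",
     "email": None, "updated": date(2022, 1, 1)},
    {"source": "marketing", "name": None, "address": None,
     "email": "jsmith@new.example", "updated": date(2024, 3, 9)},
]
golden = build_golden_record(cluster)
```

Keeping each rule as a small, named decision makes the output testable. In a dbt setup, each rule could get its own test asserting, for example, that every golden record has a non-null address.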

The cost differential is real. An open-source Zingg implementation on your existing Spark cluster costs engineering time. A commercial MDM platform costs engineering time plus $50K-$500K per year in licensing. Over five years, that adds up to $250K-$2.5M before implementation and consulting fees. That is a meaningful difference, especially for organizations that only need the golden record capability.

A Practical Framework: Which Path Fits?

Not every organization should build. Not every organization should buy. Here's how I'd think about the decision:

Build if:

- Your primary need is golden record / entity resolution

- You have a competent data engineering team

- You're already invested in a modern data stack (Databricks, Snowflake, etc.)

- Your data domains are limited (mainly customer or product, not both)

- You have existing data quality processes that work

- Cost sensitivity is high and you're thinking in multi-year horizons

Buy if:

- You need multi-domain MDM with complex cross-domain relationships

- Regulatory requirements demand formal stewardship workflows with audit trails

- You lack the engineering team to build and maintain a custom solution

- You need to solve the problem quickly (6-12 months, not 18-24)

- Your organization needs the structure that a platform imposes

- Data governance is immature and you need the tool to drive the process

Hybrid approach (often the smartest):

- Use open-source tools for matching and merging (the golden record engine)

- Layer in lightweight governance processes tailored to your organization

- Leverage your existing data platform for storage, orchestration, and serving

- Add commercial components only where open source falls short (e.g., address verification, enrichment)

The hybrid path respects a fundamental truth: you probably don't need 80% of what a commercial MDM platform offers. But the 20% you do need is critical and needs to work well.

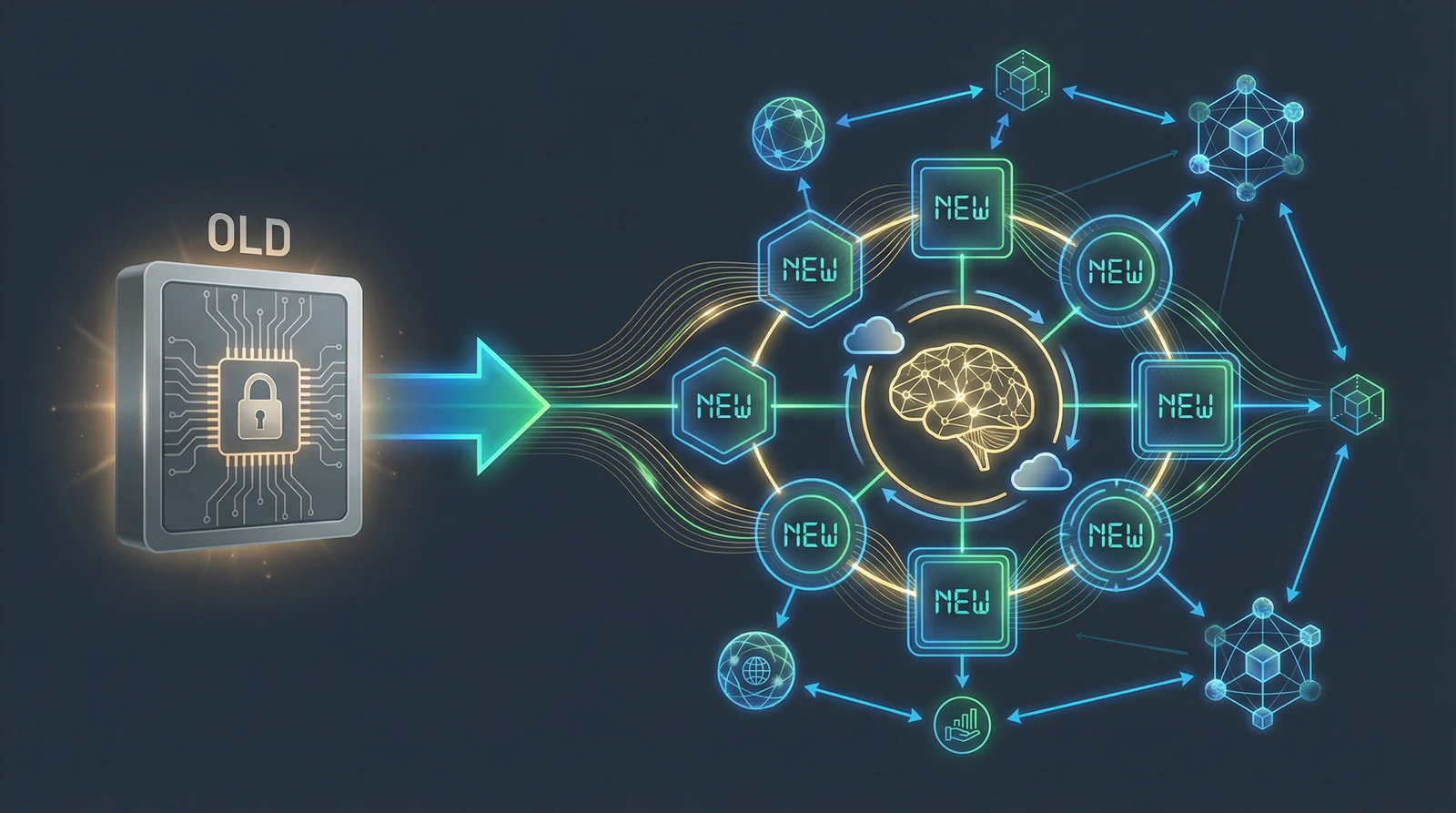

The Future: Where MDM Is Heading

The MDM landscape is undergoing a structural shift. Three trends are converging to reshape the entire category:

AI-native matching and governance. By 2026, MDM solutions are expected to use AI and predictive analytics to proactively anticipate and resolve data issues, reducing manual intervention by 60%. Agentic MDM — systems that autonomously harmonize, clean, and unify data across distributed systems — is moving from concept to reality. This changes the economics of building: ML-based matching (like Zingg) is approaching the quality of commercial solutions at a fraction of the cost.

Composable architecture. The monolithic MDM platform is giving way to best-of-breed components assembled to fit specific needs. Data mesh principles apply: domain teams own their data, with shared standards ensuring interoperability. MDM becomes a capability embedded across the architecture, not a separate system sitting to the side.

Cloud-native and real-time. Batch-oriented MDM with periodic synchronization is being replaced by event-driven architectures that reflect changes instantly. Over 80% of enterprises are expected to be on cloud-based MDM by 2026. This favors build approaches because cloud data platforms already provide the infrastructure — you're adding a matching layer, not deploying a separate system.

The trajectory points toward a world where the traditional MDM platform becomes less necessary, not more. When your data lakehouse has native entity resolution, when AI handles matching quality that used to require commercial engines, when governance is embedded in your data pipeline rather than sitting in a separate tool — the value proposition of a $200K/year MDM platform diminishes.

The Bottom Line

MDM as a concept is timeless. Every organization needs a single source of truth for its critical entities. That need isn't going away.

But MDM as an industry has overcomplicated a fundamentally straightforward problem. The vendors have every incentive to sell you a comprehensive platform — that's where the margins are. But if your actual need is "match these records and give me a golden record," you're paying for a cathedral when you need a chapel.

My take, after years of working with data at scale:

- Start with the problem, not the solution. Define exactly what "golden record" means for your organization. Which entities? Which sources? What quality threshold?

- Try the lightweight path first. Implement entity resolution with open-source tools on your existing platform. Zingg or Splink on Databricks/Spark. AWS Entity Resolution if you're in that ecosystem. See how far it gets you.

- Add governance incrementally. Don't design a governance framework for problems you don't have yet. Start with basic stewardship for your highest-priority data domain, then expand.

- Buy only what you can't build. If open-source matching isn't good enough, buy a matching engine, not a full MDM platform. If you need stewardship workflows, add that component. Compose your solution from parts, not wholesale.

- Plan for the AI shift. The matching and merging capabilities that cost $200K/year in 2024 are becoming commoditized. Make decisions that age well as AI-driven data management matures.
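The first step above asks for a quality threshold. One way to make that concrete is to measure pairwise precision and recall against a hand-labeled sample of record pairs. This also gives you the KPIs most MDM programs never collect. The 0.98 and 0.90 defaults in this sketch are placeholder assumptions. The right values depend on what a false merge costs you compared with a missed duplicate.

```python
from typing import Iterable


def pairwise_precision_recall(
    predicted_pairs: Iterable[tuple[str, str]], true_pairs: Iterable[tuple[str, str]]
) -> tuple[float, float]:
    """Compare predicted matches to labeled matches; pair order doesn't matter."""
    predicted = {frozenset(p) for p in predicted_pairs}
    truth = {frozenset(p) for p in true_pairs}
    true_positives = len(predicted & truth)
    # Convention (an assumption): an empty set counts as perfect on that metric.
    precision = true_positives / len(predicted) if predicted else 1.0
    recall = true_positives / len(truth) if truth else 1.0
    return precision, recall


def meets_threshold(predicted_pairs, true_pairs, min_precision=0.98, min_recall=0.90) -> bool:
    """Hypothetical acceptance gate for a matching run."""
    precision, recall = pairwise_precision_recall(predicted_pairs, true_pairs)
    return precision >= min_precision and recall >= min_recall


labeled = [("a", "b"), ("c", "d"), ("e", "f"), ("g", "h")]
predicted = [("b", "a"), ("c", "d"), ("e", "f"), ("x", "y")]
precision, recall = pairwise_precision_recall(predicted, labeled)
```

Tracking these two numbers on every matching run turns "is MDM working?" into a question with an answer.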

The question isn't really "build or buy." It's "how much of this do I actually need?" For most organizations, the answer is less than the vendors would have you believe — and more achievable than the failure statistics suggest.

Start small. Stay focused. Build what you need. Buy what you can't. The golden record doesn't require a golden budget.

Sources:

- Gartner Peer Insights - MDM Solutions Reviews 2026

- Stibo Systems - Build vs. Buy MDM

- CluedIn - Past, Present, and Future of MDM

- Profisee - Evolution and Future of MDM

- Syncari - New Rules of MDM for CIOs 2025

- Zingg - Open-Source Entity Resolution

- Stibo Systems - Future of MDM: Trends in 2026

- Precedence Research - MDM Market Size to Hit $94.08B by 2035

- AtroCore - Open Source MDM Explained

- Verdantis - Most Critical Challenges in MDM Projects