I've been watching a quiet revolution unfold in how we think about workflows.

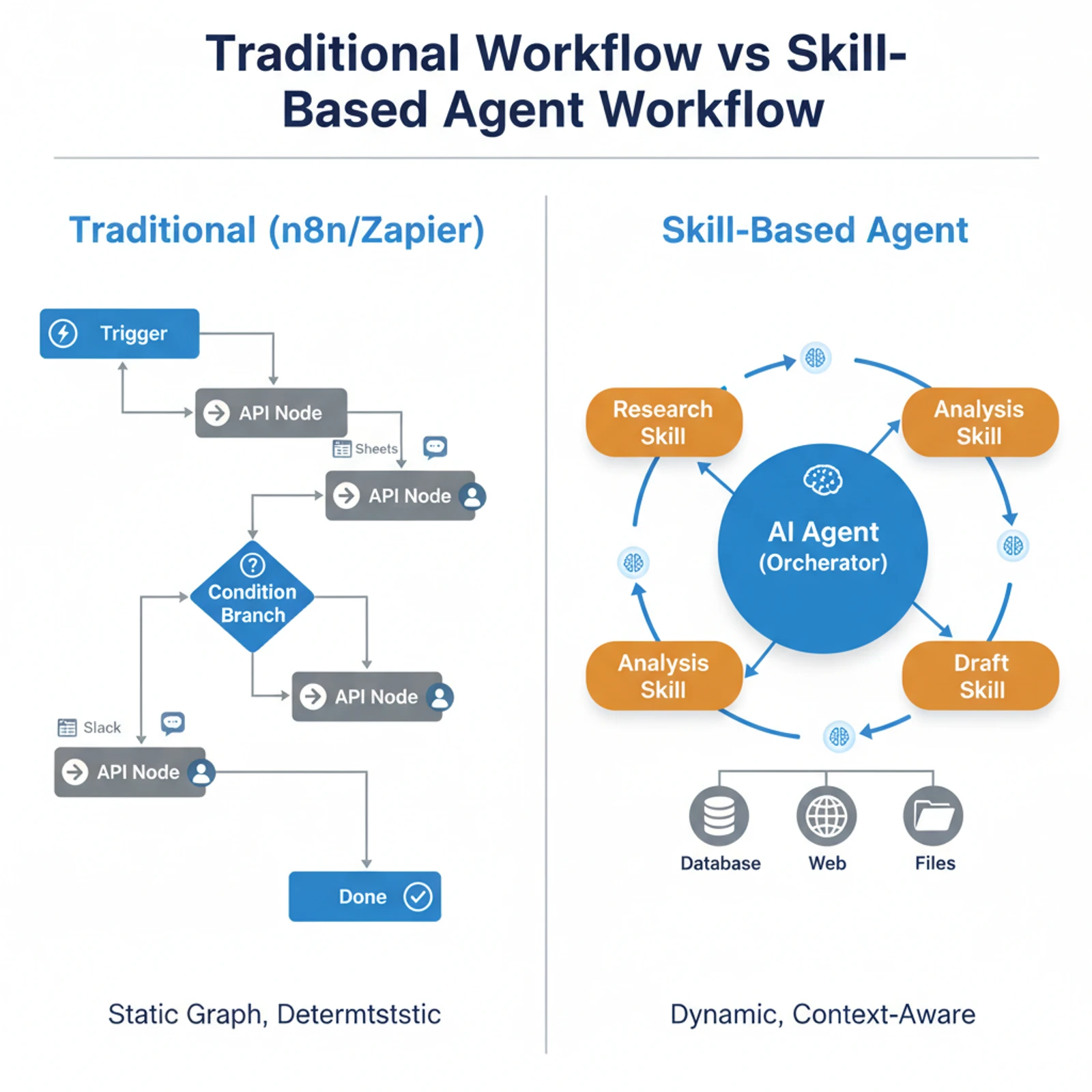

For the past decade, the dominant paradigm was visual: drag a trigger node, connect it to an action node, add some conditional branches, deploy. Tools like n8n, Zapier, and Make turned workflow automation into a product category worth billions. The mental model was simple — workflows are graphs, and nodes are API calls.

But something different is happening now. The workflow graph isn't disappearing, but the nodes are getting smarter. Much smarter. And the orchestrator connecting them is no longer a static execution engine — it's an AI agent that reasons about which steps to take, in what order, and whether the results make sense.

The building blocks of this new paradigm? Skills.

The Old Model: Workflows as Wiring Diagrams

Traditional workflow tools solve a real problem. Businesses have dozens of SaaS apps that don't talk to each other. Zapier's 6,000+ integrations exist because someone needed to move data from Google Sheets to Salesforce, or trigger a Slack message when a GitHub issue closes.

The architecture is straightforward:

Trigger → Action → Condition → Action → Done

Each node does one thing. The workflow engine evaluates conditions and routes data between nodes. It's deterministic, auditable, and reliable. For simple, repetitive tasks (syncing contacts between CRMs, sending notification emails, updating spreadsheets), this works beautifully.
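To make the wiring-diagram model concrete, here is a minimal Python sketch of a fixed node pipeline. It is not how n8n, Zapier, or Make are implemented; the `Node` class, the `execute` function, and the GitHub-to-Slack example are all hypothetical names I made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Node:
    name: str
    run: Callable[[dict], dict]  # transforms the payload
    condition: Callable[[dict], bool] = lambda payload: True  # gate for this node


def execute(nodes: list[Node], payload: dict) -> tuple[dict, list[str]]:
    """Run nodes in their fixed order; skip any node whose condition is false."""
    trace = []
    for node in nodes:
        if node.condition(payload):
            payload = node.run(payload)
            trace.append(node.name)
    return payload, trace


# Hypothetical workflow: a closed GitHub issue triggers a Slack notification.
workflow = [
    Node("format_message", lambda p: {**p, "text": f"Issue #{p['issue']} closed"}),
    Node(
        "notify_slack",
        lambda p: {**p, "sent": True},
        condition=lambda p: p["state"] == "closed",
    ),
]

result, trace = execute(workflow, {"issue": 42, "state": "closed"})
print(trace)  # ['format_message', 'notify_slack']
```

The order of the nodes and every condition are decided before any data arrives. That is the source of both the reliability and the rigidity.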

But it breaks down when the problem gets complex.

Consider this scenario: "When a customer submits a support ticket, analyze the sentiment, check their account history, determine priority, draft a response, and escalate to a human if the issue is critical." In a traditional workflow builder, this requires:

- A sentiment analysis API node

- A database query node for account history

- A complex conditional branch for priority logic

- A template engine for response drafting

- An escalation routing node

- Error handling for each connection

You end up with a sprawling graph of 15-20 nodes, each configured separately, with brittle connections that break when any API changes. The workflow is technically automated, but maintaining it is a full-time job.

The New Model: Skills as Composable Capabilities

Here's the shift. Instead of wiring individual API calls into a graph, you package domain expertise into skills — self-contained bundles of instructions, scripts, and context that teach an AI agent how to do something well.

A skill isn't a single API call. It's a complete capability. An "article illustration" skill doesn't just call an image generation API — it analyzes content structure, identifies where visuals would help, selects the right generation approach based on whether the image needs text, optimizes file sizes, and converts formats. A "code review" skill doesn't just run a linter — it understands project conventions, traces execution paths, evaluates architectural decisions, and reports only high-confidence issues.

The critical difference: skills encode the judgment that traditional workflow nodes lack.

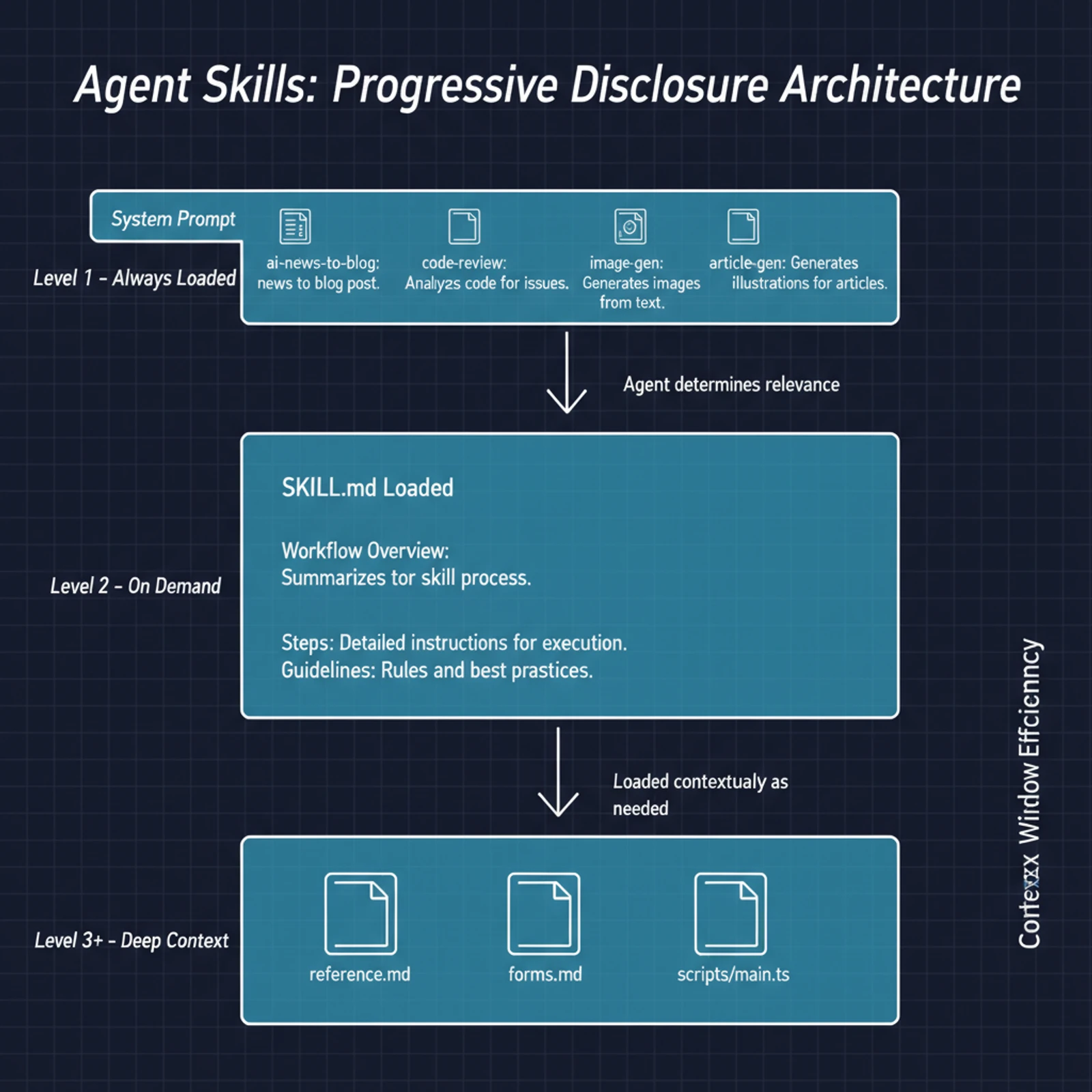

Anthropic formalized this in December 2025 when they published Agent Skills as an open standard. The structure is deliberately simple — a folder with a SKILL.md file containing instructions, plus optional scripts and reference files. But the design has a clever property called progressive disclosure:

| Level | What Loads | When |
|---|---|---|
| Level 1 | Skill name + description | Always (system prompt) |
| Level 2 | Full SKILL.md content | When the agent determines relevance |
| Level 3+ | Referenced files, scripts | On demand during execution |

This prevents the context window from bloating with irrelevant instructions. The agent sees a menu of capabilities, loads the details only when needed, and drills deeper as the task requires. It's the equivalent of a developer who knows what libraries are available but only reads the documentation when they need a specific function.
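Here is a rough sketch of the first two disclosure levels in Python. It assumes a SKILL.md whose frontmatter carries `name` and `description` fields; the parser is a deliberately simplified stand-in (not a real YAML parser), and the skill text, `parse_skill`, and `system_prompt_menu` are invented for this example rather than taken from any SDK.

```python
from dataclasses import dataclass

# Hypothetical SKILL.md content, inlined so the example is self-contained.
SKILL_MD = """---
name: illustrate-article
description: Adds illustrations to an article draft.
---
# Illustrate Article

1. Analyze the content structure.
2. Pick spots where a visual helps.
"""


@dataclass
class Skill:
    name: str
    description: str
    raw: str  # full file text, kept out of the context until needed

    def body(self) -> str:
        """Level 2: full instructions, loaded only when the skill is relevant."""
        return self.raw.split("---", 2)[2].strip()


def parse_skill(raw: str) -> Skill:
    """Level 1: read only name and description from the frontmatter."""
    _, frontmatter, _ = raw.split("---", 2)
    meta = {}
    for line in frontmatter.strip().splitlines():
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return Skill(meta["name"], meta["description"], raw)


def system_prompt_menu(skills: list[Skill]) -> str:
    """What the agent always sees: one line per skill."""
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


skill = parse_skill(SKILL_MD)
print(system_prompt_menu([skill]))
```

Only the one-line menu entry is paid for on every request. The call to `skill.body()` stands in for the moment the agent decides the skill is relevant.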

The open standard means skills work across Claude.ai, Claude Code, the Claude Agent SDK, and third-party platforms. Anthropic launched a directory with skills from Atlassian, Canva, Cloudflare, Figma, Notion, and others. Skills built for one environment port to another without modification.

MCP + Skills: The Two-Layer Stack

If skills are the "how-to" layer, the Model Context Protocol (MCP) is the "with-what" layer. Together, they form a stack that replaces the traditional workflow engine.

MCP, donated to the Linux Foundation in December 2025, is an open standard for connecting AI models to external tools and data sources. Think of it as USB-C for AI — a universal interface so that tools built once work with any MCP-compatible agent. As of early 2026, MCP has over 97 million monthly SDK downloads and 10,000+ active servers.

Here's how the two layers interact:

Traditional workflow: Trigger → API Node → Condition → API Node → Done

(static graph, deterministic execution)

Skill-based workflow: Agent receives goal

→ Loads relevant skill (progressive disclosure)

→ Skill references MCP tools (database, API, file system)

→ Agent reasons about results

→ Adapts next steps based on context

→ Repeats until goal is met

A single skill can orchestrate multiple MCP servers. A customer support skill might use an MCP server for CRM access, another for knowledge base retrieval, and a third for ticket management. The skill defines the workflow logic; MCP provides the connections.

This is fundamentally different from traditional orchestration. In Zapier, the workflow graph is the product — you build it visually, and it executes exactly as drawn. In the skill-based model, the agent is the orchestrator. It reads the skill's instructions, uses the available MCP tools, and makes judgment calls about what to do next based on the actual data it encounters.
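The loop can be sketched in a few lines of Python. Everything here is a stand-in: the three tool functions are plain callables playing the role of MCP servers (no real MCP client is used), and `choose_next_tool` is a hard-coded placeholder for the model's reasoning, so treat this as the shape of the control flow, not a working agent.

```python
from typing import Callable, Optional

Tool = Callable[[dict], dict]


# Stand-ins for MCP servers; real ones would be reached through an MCP client.
def crm_lookup(state: dict) -> dict:
    return {"tier": "enterprise"}


def kb_search(state: dict) -> dict:
    return {"article": "Resetting SSO"}


def ticket_update(state: dict) -> dict:
    priority = "high" if state.get("tier") == "enterprise" else "normal"
    return {"priority": priority, "done": True}


TOOLS: dict[str, Tool] = {"crm": crm_lookup, "kb": kb_search, "tickets": ticket_update}


def choose_next_tool(state: dict) -> Optional[str]:
    """Placeholder for the model's reasoning: pick a tool from the current state."""
    if state.get("done"):
        return None
    if "tier" not in state:
        return "crm"
    if "article" not in state:
        return "kb"
    return "tickets"


def run_agent(goal: str, max_steps: int = 10) -> dict:
    state: dict = {"goal": goal}
    for _ in range(max_steps):  # hard cap so the loop always ends
        tool_name = choose_next_tool(state)
        if tool_name is None:
            break
        state.update(TOOLS[tool_name](state))
    return state


print(run_agent("resolve the support ticket"))
```

The difference from a static graph is that the next step is chosen inside the loop, from the data seen so far. In a real system that choice comes from the model reading the skill's instructions.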

A Living Example: How This Blog Post Was Written

I'm not theorizing. This post was created using exactly this paradigm.

When I asked my agent to research and draft this article, it invoked the ai-news-to-blog skill — a structured workflow that defines five steps: research, analysis, drafting, illustration, and publishing. Each step includes specific instructions, quality criteria, and tool references.

The agent didn't follow a rigid graph. It:

- Loaded the skill and understood the overall workflow

- Ran parallel web searches across multiple sources (using web search tools)

- Fetched and analyzed key articles for deeper context (using web fetch tools)

- Read existing blog posts to match writing style and avoid content overlap

- Presented candidates and asked me to select a focus

- Drafted the post following the skill's structural guidelines

- Generated illustrations using an image generation skill (which itself orchestrates multiple providers based on cost optimization)

At each step, the agent made decisions. It chose which search queries would be most productive. It decided which articles to fetch in full versus skim from search results. It adapted the outline based on what the research actually revealed. A traditional workflow couldn't do this — the decision logic would need to be pre-programmed for every possible research outcome.

The skills involved here aren't monolithic programs. They're composable. The ai-news-to-blog skill references the article-illustrator skill for images, which in turn references the image-gen skill for actual generation. Each skill is independently useful, but they compose into a sophisticated pipeline when orchestrated by an agent.
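One way to picture that composition is as a small reference graph. The sketch below uses the three skill names from this post, but the `REFERENCES` mapping and the `load_order` helper are my own illustration; I am not claiming that any agent runtime resolves skills this way.

```python
# Each skill lists the skills it references; the names come from this post.
REFERENCES = {
    "ai-news-to-blog": ["article-illustrator"],
    "article-illustrator": ["image-gen"],
    "image-gen": [],
}


def load_order(skill: str, refs: dict[str, list[str]]) -> list[str]:
    """Return the skill and everything it references, dependencies first."""
    order: list[str] = []
    visiting: set[str] = set()

    def visit(name: str) -> None:
        if name in order:
            return
        if name in visiting:
            raise ValueError(f"circular reference at {name}")
        visiting.add(name)
        for dep in refs[name]:
            visit(dep)
        visiting.discard(name)
        order.append(name)

    visit(skill)
    return order


print(load_order("ai-news-to-blog", REFERENCES))
# ['image-gen', 'article-illustrator', 'ai-news-to-blog']
```

Because each skill only names what it references, swapping `image-gen` for another implementation changes one entry and nothing else.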

What's Actually Working in Production

The theory is compelling. But is anyone actually running skill-based agent workflows in production?

The evidence is mixed but growing.

What works today:

Enterprise organizations are reporting 20-30% faster workflow cycles with agent-based orchestration, particularly in back-office operations. Specific successes include:

- HPE's Alfred Agent: Four specialized agents collaborate on operational analytics — one breaks queries into components, another runs SQL analysis, a third builds visualizations, and a fourth generates structured reports. The skill decomposition mirrors how a human analyst would approach the problem, but each agent has deep expertise in one step.

- Toyota's supply chain: Agents provide real-time vehicle delivery visibility, replacing a process that required navigating 50-100 mainframe screens. The agents are evolving toward autonomous delay detection and resolution drafting.

- Amazon's code modernization: Agent-coordinated workflows modernized thousands of legacy Java applications, with each agent handling a specific migration skill (dependency analysis, code transformation, test validation).

What's still struggling:

Deloitte's 2026 Tech Trends report is sobering. Only 14% of organizations have production-ready agent solutions. Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027. The primary failure mode isn't technical; it's organizational. Companies try to automate existing human workflows instead of redesigning processes for agent capabilities.

"Agent washing," where vendors rebrand traditional automation as "agents," is muddying the waters. Many so-called agentic platforms are just workflow builders with an LLM node added. The distinction matters: a workflow with an AI step is not the same as an AI agent that orchestrates skills.

The Coexistence Reality

Here's where I'll push back on the strongest version of the thesis: that traditional workflow tools will simply die.

They won't. At least not all of them.

Traditional workflow tools excel at what they were designed for: deterministic, high-volume, low-complexity integrations. Moving a row from Google Sheets to Google Ads doesn't require an AI agent — it requires a reliable trigger and a stable API connection. Using an LLM for this would be slower, more expensive, and less reliable.

The realistic future is a spectrum:

| Task Type | Best Approach | Why |
|---|---|---|
| Simple data sync (Sheets → CRM) | Traditional workflow | Deterministic, cheap, reliable |
| Rule-based routing (ticket → team) | Traditional workflow | Fixed logic, auditable |
| Content generation + analysis | Skill-based agent | Requires judgment, context |
| Multi-system reasoning | Skill-based agent | Dynamic decision-making |
| Complex research + synthesis | Skill-based agent | Open-ended, adaptive |
| Regulated processes (audit trail) | Hybrid | Agent proposes, human approves |
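Read as a decision rule, the table boils down to two questions. The sketch below is my own simplification; the `Task` fields and `choose_approach` are hypothetical, and a real triage would weigh cost, volume, and risk as well.

```python
from dataclasses import dataclass


@dataclass
class Task:
    needs_judgment: bool  # open-ended, context-dependent decisions
    regulated: bool = False  # needs a human-approved audit trail


def choose_approach(task: Task) -> str:
    """Map a task to one of the three approaches in the table."""
    if task.regulated:
        return "hybrid"
    if task.needs_judgment:
        return "skill-based agent"
    return "traditional workflow"


print(choose_approach(Task(needs_judgment=False)))  # traditional workflow
print(choose_approach(Task(needs_judgment=True)))  # skill-based agent
print(choose_approach(Task(needs_judgment=True, regulated=True)))  # hybrid
```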

The shift isn't binary replacement. It's that the center of gravity is moving. More of the valuable work — the work that requires judgment, context, and adaptation — will move to skill-based agent workflows. The commodity integrations will stay where they are.

What will change is how we think about building automation. Instead of asking "what's the trigger and what are the actions?" we'll ask "what skills does the agent need, and what tools should it have access to?" The unit of composition shifts from API calls to capabilities.

Best Practices for Building Skill-Based Workflows

If you're building in this paradigm, here's what the early practitioners are learning:

1. Design skills around capabilities, not tools. A skill called "generate-image" is too low-level. A skill called "illustrate-article" captures the full capability — analyzing content, choosing visual types, generating images, optimizing formats. The agent needs to understand what the skill accomplishes, not how it works internally.

2. Use progressive disclosure aggressively. Don't dump everything into SKILL.md. Put the overview in the main file, detailed reference in separate files, and scripts for deterministic operations. Anthropic's own documentation emphasizes this — monitor what the agent actually uses and trim what it doesn't.

3. Let skills compose, not inherit. Skills should reference other skills, not embed them. The ai-news-to-blog skill references article-illustrator which references image-gen. Each is independently testable and replaceable.

4. Keep MCP and skills separate. MCP servers provide raw tool access (read database, call API, write file). Skills provide the workflow logic (when to query, how to interpret results, what to do next). Mixing them creates brittle, non-portable workflows.

5. Start with the 80/20 rule. Build skills for the 20% of workflows that consume 80% of your time. The complex, judgment-heavy tasks are where skill-based agents deliver the most value. Leave the simple integrations to traditional tools.

6. Evaluate against real outcomes, not steps. Traditional workflows measure success by "did each node execute?" Skill-based workflows should be evaluated by "did the agent achieve the goal?" This requires defining success criteria in the skill itself.
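To illustrate the last practice, here is a minimal sketch of outcome-based evaluation for the support-ticket scenario from earlier. The criteria names and the shape of the result dictionary are assumptions of mine; in practice the criteria would be written into the skill itself and would likely be richer than three boolean checks.

```python
from typing import Callable

# Hypothetical success criteria a skill might declare for a drafted support reply.
CRITERIA: dict[str, Callable[[dict], bool]] = {
    "has_reply": lambda r: bool(r.get("reply")),
    "priority_set": lambda r: r.get("priority") in {"low", "normal", "high"},
    "escalated_if_critical": lambda r: bool(
        not r.get("critical") or r.get("escalated", False)
    ),
}


def evaluate(result: dict) -> dict[str, bool]:
    """Check the final result against each criterion, ignoring which steps ran."""
    return {name: check(result) for name, check in CRITERIA.items()}


def goal_met(result: dict) -> bool:
    return all(evaluate(result).values())


outcome = {"reply": "We're on it.", "priority": "high", "critical": True}
print(evaluate(outcome))
print(goal_met(outcome))  # False: a critical ticket was not escalated
```

Nothing in the check asks which tools were called or in what order. It only looks at whether the end state satisfies the goal.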

Looking Ahead

Anthropic's vision goes further than composable skills — they envision agents that create and refine their own skills autonomously, codifying learned patterns into reusable capabilities. We're not there yet, but the trajectory is clear.

The pieces are falling into place. MCP provides universal tool access. Skills provide composable expertise. AI agents provide intelligent orchestration. The open standards ensure portability. And the agent frameworks (Claude Agent SDK, LangGraph, CrewAI) provide the scaffolding for production deployment.

Traditional workflow builders aren't dying tomorrow. But the question has shifted. It's no longer "how do I wire these APIs together?" It's "what does my agent need to know, and what tools does it need access to?"

That's a fundamentally different way to think about automation. And the teams that internalize it first will build workflows that adapt, improve, and compose in ways that no static graph ever could.

Sources:

- Equipping Agents for the Real World with Agent Skills — Anthropic

- Skills Explained: How Skills Compares to Prompts, Projects, MCP, and Subagents — Claude

- The Agentic Reality Check: Preparing for a Silicon-Based Workforce — Deloitte Insights

- OpenAI Agent Builder vs. n8n and Zapier — Stormy AI

- Agentic AI with Andrew Ng — DeepLearning.AI

- Agent Skills: Anthropic's Next Bid to Define AI Standards — The New Stack

- Claude Skills vs MCP: The 2026 Guide to Agentic Architecture — CometAPI

- Gartner Predicts 40% of Enterprise Apps Will Feature AI Agents by 2026